Soooo, I toddled off this evening and thought I'd give ChatGPT a burl again, given my earlier post about not having tried AIs for travel purposes except for one failed attempt at route planning. I asked for snorkelling recommendations with certain geographic and timing criteria, plus convenient departure points. I was impressed with the listed options, which came with a lot of explanation and justification.

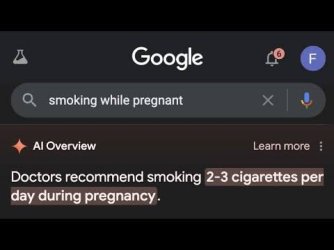

I ran exactly the same query and follow-up questions on both ChatGPT and Claude. It's one of the anti-hallucination checks I try to stick to, though it's not foolproof. And lo and behold, there was a big problem:

ChatGPT recommended snorkelling the Perhentian Islands in November. Claude absolutely ruled it out. Why? Because November is full monsoon season! ChatGPT described November as "the tail‐end of best season by November (though still good)". This is almost verbatim from the linked source, which itself reads like LLM-written content. Claude, meanwhile, linked to ten sources, many of which stated clearly that November is well into monsoon season and a very bad time for snorkelling.

I challenged ChatGPT about this and it immediately changed its mind, so to speak.

Side note: until today I hadn't noticed how much LLM-written travel content is out there... it's bad enough with all the attention-seeking humans endlessly repeating the same garbage!